Whether you’re a pentester looking to gain some experience in mobile hacking or a developer aiming to build secure apps, familiarizing yourself with the common security mistakes developers make will serve you well. Here I’ve listed and summarized the five most common types of mobile app security flaws that I’ve seen in the real world, describing each flaw, its impact, and the likely reasons it appears so frequently. Of course, this list is based solely on my own experience and is not backed by any official statistics.

#5 SSL Flaws / Man in the Middle

Both Android and iOS have built-in certificate handling code, but when app developers decide to write their own implementation, there’s a decent chance they’ll make a mistake. If the app doesn’t properly validate certificate chains and their signatures, an attacker could trick it into accepting a certificate that impersonates the app’s server. I previously wrote a post diving deeper into the causes and impact of this kind of mistake, though it focuses on iOS apps only: Exploiting SSL Vulnerabilities in Mobile Apps.

Certificate handling flaws can lead to man-in-the-middle vulnerabilities that allow attackers to eavesdrop on or modify communications. These SSL bugs usually occur when developers implement their own custom certificate handling code, perhaps because the native libraries lack some functionality they want. Other times, they happen when developers relax network security settings during development and testing and then forget to restore them in the production app.
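To make the “forgot to switch it back” scenario concrete, here’s a minimal sketch that uses Python’s standard `ssl` module as a stand-in for a mobile networking stack. iOS and Android expose these settings through their own APIs; the helper names below are hypothetical. `ssl.create_default_context()` verifies both the certificate chain and the hostname, while the debug branch turns both checks off, which is exactly the kind of setting that can leak into a release build.

```python
import ssl


def make_tls_context(allow_insecure_for_debug: bool = False) -> ssl.SSLContext:
    """Build a client TLS context (hypothetical helper, for illustration)."""
    # Secure defaults: CERT_REQUIRED plus hostname checking against system CAs.
    ctx = ssl.create_default_context()
    if allow_insecure_for_debug:
        # A typical testing shortcut: accept any certificate for any host.
        # check_hostname must be disabled before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


def validates_server(ctx: ssl.SSLContext) -> bool:
    """True only if chain verification and hostname checking are both on."""
    return ctx.verify_mode == ssl.CERT_REQUIRED and ctx.check_hostname
```

A check like `validates_server(make_tls_context())` could run as part of a release-build test, so an insecure debug setting can’t slip into production unnoticed.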

The tweet below discusses some research that discovered rampant SSL implementation flaws in banking apps:

Critical Implementation Flaw Left Major Banking Apps for Android and iOS Vulnerable to Man-in-the-Middle Attacks Over SSL/TLS — Even if SSL Pinning Is Enabled. https://t.co/Ej0jb5REb1

— The Hacker News (@TheHackersNews) December 8, 2017

HSBC, NatWest, Co-op, Santander Were Among Affected Banking Apps.
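The tweet doesn’t go into detail, but its headline makes a useful point: certificate pinning is an extra check, not a replacement for ordinary chain and hostname validation. Here’s a minimal Python sketch of that decision logic. The pin set and function names are hypothetical, and in a real app `chain_valid` and `hostname_matches` would come from the platform’s TLS stack rather than being passed in as parameters.

```python
import hashlib

# Hypothetical pin set: SHA-256 fingerprints (hex) of the DER-encoded server
# certificate(s) the app expects. Many apps pin the public key instead.
PINNED_SHA256 = {
    hashlib.sha256(b"example-server-cert-der").hexdigest(),
}


def cert_fingerprint(der_cert: bytes) -> str:
    """Hex SHA-256 digest of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def accept_server(chain_valid: bool, hostname_matches: bool, leaf_der: bytes) -> bool:
    """Accept the connection only if standard validation AND the pin both pass."""
    if not (chain_valid and hostname_matches):
        # Pinning supplements chain and hostname validation; it never replaces them.
        return False
    return cert_fingerprint(leaf_der) in PINNED_SHA256
```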

#4 Outdated Vulnerable Frameworks

You’ve probably all seen articles about the latest framework that had to rush out a patch for an ugly vulnerability. It happens fairly often, for both iOS and Android frameworks. The owners of these frameworks typically try to alert developers and advise them to update immediately, but those developers don’t always pay attention. Checking whether an app uses a known vulnerable version of a third-party framework is a simple task and, depending on the framework in question, can quickly lead to a high-impact bug.

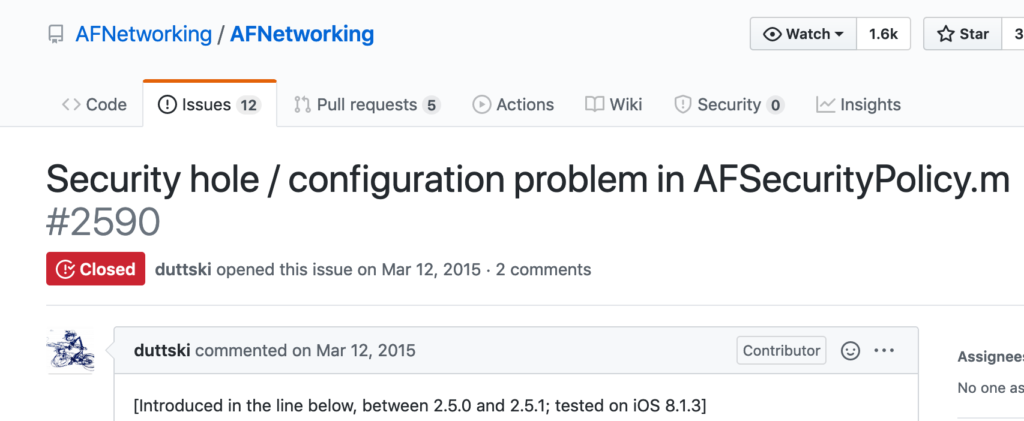

Let’s look at the AFNetworking framework for iOS. One of the many functions this library provides is handling certificate validation logic. Version 2.5.1, released in 2015, introduced a bug that broke its certificate validation entirely.

An update wasn’t put out until about a month later. That left plenty of time for developers to unknowingly integrate the vulnerable version into their apps.
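Spotting this is easy to automate. As a rough sketch, assuming an iOS app that uses CocoaPods, the resolved dependency versions are listed in the `PODS` section of `Podfile.lock`, and a few lines of Python can compare them against an advisory list. The `KNOWN_VULNERABLE` table below contains only the AFNetworking version mentioned above; a real check would pull from a maintained vulnerability database.

```python
import re

# Illustrative advisory data only: the one version named in this post.
KNOWN_VULNERABLE = {
    "AFNetworking": {"2.5.1"},
}

# Matches Podfile.lock entries such as:
#   - AFNetworking (2.5.1):
#   - AFNetworking/NSURLConnection (2.5.1)
# Group 1 is the pod name (subspec stripped), group 2 the text in parentheses.
POD_LINE = re.compile(r"^\s*-\s+([^\s/(]+)(?:/[^\s(]+)?\s+\(([^)]+)\)")


def find_vulnerable_pods(lockfile_text: str) -> set[tuple[str, str]]:
    """Return (pod, version) pairs that appear in the advisory table."""
    hits = set()
    for line in lockfile_text.splitlines():
        match = POD_LINE.match(line)
        if not match:
            continue
        name, version = match.group(1), match.group(2)
        # Constraint entries like "(= 2.5.1)" or "(~> 2.5)" won't match exactly,
        # so only concrete resolved versions are flagged.
        if version in KNOWN_VULNERABLE.get(name, set()):
            hits.add((name, version))
    return hits
```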

You’d think this problem is so easy to avoid that it would hardly ever happen – all you have to do is update your frameworks! In reality, developers often put off this simple task for as long as they can. Why might they do this? Even setting aside those who simply missed the news of the security bug, developers may avoid updating their libraries because they fear the changes could break something in their code that they won’t have time to fix. A simpler explanation is plain laziness.

#3 Insecure Direct Object Reference (IDOR)

While you could argue that IDORs aren’t technically flaws in the mobile app itself, they often turn up in an app’s REST API. For those who are unfamiliar, PortSwigger offers this definition:

Insecure direct object references (IDOR) are a type of access control vulnerability that arises when an application uses user-supplied input to access objects directly

https://portswigger.net/web-security/access-control/idor

Here’s a basic example: let’s say we’re logged into a messaging app and we intercept a request to this endpoint when we open our inbox:

https://xxxxx.com/messages/inbox/latest?id=1948992

We can infer that this call is fetching our latest messages and that ‘1948992’ is our user ID. So what happens if we change the ‘id’ parameter to a different user’s ID?

https://xxxxx.com/messages/inbox/latest?id=37173925

A non-vulnerable app would return an error, since we are not authorized to read this user’s inbox. But when an IDOR is present, the call will succeed and we will be able to read the victim’s messages!
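On the server side, the difference between a vulnerable and a fixed endpoint usually comes down to a single authorization check. The sketch below is hypothetical Python backend code (in-memory data, made-up function names) mirroring the example above: the vulnerable handler trusts the `id` parameter, while the fixed one compares it with the user ID tied to the authenticated session.

```python
# Hypothetical in-memory data store: user ID -> inbox contents.
INBOXES = {
    1948992: ["a message in our own inbox"],
    37173925: ["the victim's private message"],
}


def latest_messages_vulnerable(session_user_id: int, requested_id: int) -> list[str]:
    # IDOR: the 'id' query parameter is used directly and never compared
    # with the identity of the authenticated caller.
    return INBOXES.get(requested_id, [])


def latest_messages_fixed(session_user_id: int, requested_id: int) -> list[str]:
    # Authorization check: callers may only read their own inbox.
    if requested_id != session_user_id:
        raise PermissionError("not authorized to read this inbox")
    return INBOXES.get(requested_id, [])
```

An even simpler design is to drop the `id` parameter altogether and derive the inbox owner from the session, so there is nothing for the client to tamper with.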

In my experience, IDORs are just as common in mobile APIs as in web APIs, if not more so. This is most likely due to the misguided assumption that intercepting and inspecting traffic from a mobile app is more difficult than doing so in a browser, which may lead developers to feel that they can spend less time hardening their mobile APIs.


#2 Client-Side Validation

When the server relies on protection mechanisms placed on the client side, an attacker can modify the client-side behavior to bypass the protection mechanisms, resulting in potentially unexpected interactions between the client and server. The consequences will vary, depending on what the mechanisms are trying to protect.

https://cwe.mitre.org/data/definitions/602.html

With these bugs, developers trust that the client has not been manipulated or tampered with. In my experience, client-side validation flaws occur much, much more frequently in mobile apps than in web apps.

Client-side validation is not necessarily a security issue itself – it does have legitimate uses. For non-sensitive operations, and as long as the back-end server can safely handle and reject malformed inputs, there isn’t much risk here.

Problems occur when developers rely on client-side validation for sensitive actions that require some sort of authentication. As a general rule, you should never trust the client alone to make these determinations. It’s better to assume that client apps can be manipulated, which is why it’s so important to handle sensitive authentication operations on the server side.

A typical client-side validation flaw is a PIN bypass. Plenty of apps have their own PIN lock option, and some of those rely on client-side validation to determine whether to grant access or not.

In these cases, bypassing the PIN could be as easy as locating the method responsible for checking if the supplied PIN is correct, hooking it, and changing it to always return ‘true’.
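The fix is to make the server the authority on whether a PIN is correct, so hooking the client-side check gains an attacker nothing. Here’s a minimal sketch of a server-side verifier in Python; the `PinStore` class, the PBKDF2 iteration count, and the lockout threshold are all illustrative assumptions. Because a short PIN has very few possible values, the attempt limit matters at least as much as the hashing.

```python
import hashlib
import hmac
import os

MAX_ATTEMPTS = 5  # hypothetical lockout threshold


class PinStore:
    """Server-side PIN verification sketch (hypothetical class)."""

    def __init__(self) -> None:
        self._records: dict[str, tuple[bytes, bytes]] = {}  # user -> (salt, hash)
        self._failures: dict[str, int] = {}

    @staticmethod
    def _hash(pin: str, salt: bytes) -> bytes:
        return hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, 100_000)

    def set_pin(self, user: str, pin: str) -> None:
        salt = os.urandom(16)
        self._records[user] = (salt, self._hash(pin, salt))
        self._failures[user] = 0

    def verify(self, user: str, pin: str) -> bool:
        # Unknown users and locked-out users are always rejected.
        if user not in self._records or self._failures[user] >= MAX_ATTEMPTS:
            return False
        salt, expected = self._records[user]
        ok = hmac.compare_digest(self._hash(pin, salt), expected)
        # Reset the counter on success, count the failure otherwise.
        self._failures[user] = 0 if ok else self._failures[user] + 1
        return ok
```

The client would only receive a session token after `verify` succeeds on the server, so a hooked method that always returns ‘true’ no longer unlocks anything.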

Other frequent targets include free apps that offer an upgrade to a paid ‘premium’ tier. A vulnerable app may make an API call to the back end on launch to determine the user’s tier, store the response locally, and then consult that stored value whenever it later needs to know the tier. All it takes to access the premium content for free is a little runtime manipulation: change the stored value to ‘true’. This type of bug has more of a business impact than a security impact, but it demonstrates the concept well.
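The corresponding server-side fix is to check the user’s entitlement every time premium content is requested, instead of trusting a value the client reports or caches. A hypothetical sketch, with made-up names and data:

```python
# Hypothetical server-side records. In practice entitlements would live in a
# database populated from verified purchase receipts.
ENTITLEMENTS = {"alice": "premium", "bob": "free"}
PREMIUM_CONTENT = {"article-42": "premium-only text"}


def get_premium_content(user: str, content_id: str, client_says_premium: bool = False) -> str:
    # client_says_premium is deliberately ignored: anything the client reports
    # or stores locally can be flipped with a little runtime manipulation.
    if ENTITLEMENTS.get(user) != "premium":
        raise PermissionError("premium subscription required")
    return PREMIUM_CONTENT[content_id]
```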

The most serious client-side validation flaws in mobile apps usually involve a full or partial authentication bypass. Here’s a great example detailing a 2FA bypass found in PayPal’s mobile app a few years back: the 2FA requirement was only being enforced on the client side! You can also read the accompanying blog post on this finding here.

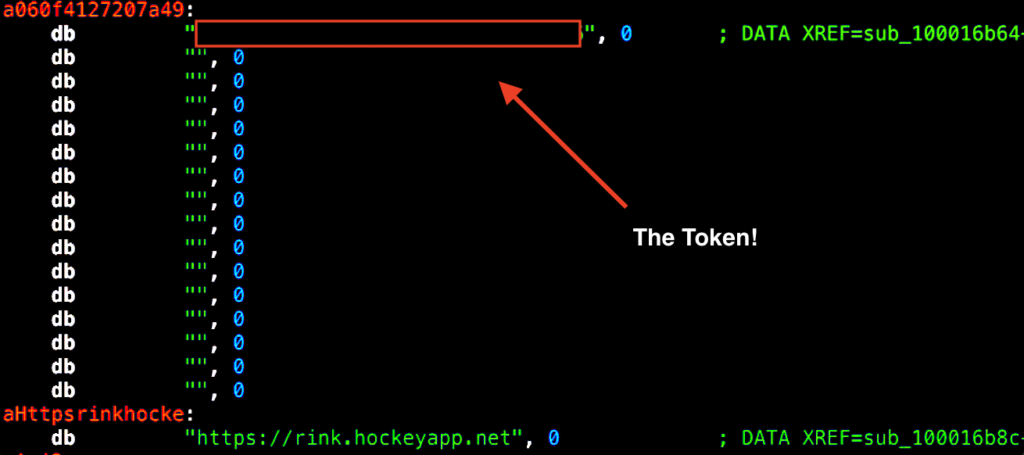
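The underlying principle is that the server must track which authentication steps a session has actually completed, and every protected endpoint must check that state. A minimal sketch in Python with hypothetical names; the real password and one-time-code checks are reduced to booleans so the state logic stands on its own:

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Server-side session state (hypothetical)."""
    user: str
    password_ok: bool = False
    second_factor_ok: bool = False


def record_password_check(session: Session, password_valid: bool) -> None:
    # In a real server, password_valid would come from verifying a stored hash.
    session.password_ok = password_valid
    session.second_factor_ok = False  # a new login attempt restarts the 2FA step


def record_otp_check(session: Session, otp_valid: bool) -> None:
    # The second factor only counts if the first one already succeeded.
    session.second_factor_ok = session.password_ok and otp_valid


def require_full_auth(session: Session) -> None:
    """Guard for every protected endpoint; what the client claims is irrelevant."""
    if not (session.password_ok and session.second_factor_ok):
        raise PermissionError("two-factor authentication not completed")
```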

#1 Hardcoded Secrets

Most of you probably saw this one coming. Depending on what has been exposed, the impact can range anywhere from a mild annoyance to a complete disaster. Some commonly leaked secrets include AWS credentials, Google API keys, social network API tokens, and RSA private keys. I wrote about my experience uncovering a pattern of hardcoded, critically sensitive HockeyApp API tokens a few months ago.

Leaked secrets turn up just about everywhere: in web apps, IoT devices, GitHub repos, and more. But again and again, some of the worst disclosures seem to come from mobile apps. There are several plausible explanations for this trend.

I believe the primary reason is simple: many people are not aware that mobile apps can be reverse engineered. Most web developers know that the code they’re writing will be publicly accessible and are reminded constantly to be mindful of that. But the same risks in mobile apps don’t seem to be talked about as often. Some developers probably think that the mobile OS itself offers some protection – and that’s true – but it’s not enough to stop someone from perusing the internals of their app. Other times, the developer may simply not have realized that they were working with sensitive information, though this usually involves lower-impact disclosures.
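To see how little effort finding these takes, here’s a rough Python sketch of the kind of pattern matching a tester might run over files pulled out of an app package. The regexes cover a few well-known key formats (long-term AWS access key IDs start with `AKIA`, Google API keys with `AIza`); they’re illustrative rather than exhaustive, and real scanning tools add many more patterns plus entropy checks.

```python
import re

# A few well-known secret formats (illustrative, not exhaustive).
PATTERNS = {
    "aws_access_key_id": re.compile(rb"AKIA[0-9A-Z]{16}"),
    "google_api_key": re.compile(rb"AIza[0-9A-Za-z_\-]{35}"),
    "rsa_private_key": re.compile(rb"-----BEGIN RSA PRIVATE KEY-----"),
}


def scan_binary(data: bytes) -> list[tuple[str, bytes]]:
    """Return (pattern name, matched bytes) for every hit in raw file contents."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(data):
            findings.append((name, match.group(0)))
    return findings
```

For example, you might feed `scan_binary` the raw bytes of the `classes.dex` file from an unzipped APK. If a scan this crude finds something, so will an attacker.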

These vulnerabilities should give you a sense of the current trends in mobile security. In addition to simply knowing about these flaws, I hope you’ve gained an understanding of why they happen so often. Putting yourself in the mindset of a developer if you’re a pentester – or of a hacker if you’re a developer – will lead to more creative thought and ultimately to uncovering or counteracting more security flaws in the future. Thanks for reading!